I finally managed to get bootsplash patched into the SLAX kernel using the patches from here:

http://www.uli-eckhardt.de/bootsplash/index.en.shtml

You only need to edit one of the patches, “Patch for 3.4 bootsplash-3.4.diff.bz2”, to remove some parts of it. The result still works with later kernel versions.

After recompiling the kernel, how to get the bootsplash working is not very clear on most websites. With the old initrd or initrd.gz, one would simply run:

```shell
splash -s -f [full path to bootsplash config] >> initrd
```

Unfortunately, this doesn’t work with an initramfs, because the cpio-created archive gets corrupted if you append to it this way. After going through a lot of websites with methods that didn’t work, I looked at the bootsplash patches themselves and noticed the portion under “init”, which says the kernel will attempt to open the file /bootsplash. That implies the bootsplash image should be inside the initramfs. A little more Googling confirmed this on at least two more websites. It was like finding gold, let me tell you. A bit of experimenting proved the point, and I now have bootsplash running with the initramfs.
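
Following that reasoning, one way to confirm the file really sits in the root of an initramfs is to list the archive. This is a minimal sketch, assuming an xz-compressed cpio image like the SLAX one; `list_initramfs` and `has_root_bootsplash` are hypothetical helper names, not part of the bootsplash tools:

```shell
# Hypothetical helper: list the members of an xz-compressed cpio initramfs.
list_initramfs() {
    xz -dc "$1" | cpio -t 2>/dev/null
}

# Reads a cpio listing on stdin and succeeds only if "bootsplash" is at the
# archive root, stored either as "bootsplash" or "./bootsplash".
has_root_bootsplash() {
    grep -Eqx '(\./)?bootsplash'
}
```

For example: `list_initramfs initrfs.img | has_root_bootsplash && echo "found /bootsplash"`. Keeping the check as a stdin filter means it works on any listing, whichever decompressor produced it.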

Lots of people have said bootsplash is a thing of the past and will be made obsolete by KMS, but I still think it’s the easiest to get working. For now it gives an option to those who want to brand their distros but don’t want to start compiling something else like fbsplash or Plymouth. These are the steps I took:

- Get the bootsplash user-space utilities from any of the links here: http://www.filewatcher.com/m/bootsplash-3.1.tar.bz2.112416-0.html

- Compile and install the utilities. You should now have /etc/bootsplash, and the splash tools in /sbin.

- Put your verbose and silent splash images (or a complete theme) and the config into /etc/bootsplash/themes.

- Generate the bootsplash file for the initramfs from the config with:

```shell
/sbin/splash -s -f /etc/bootsplash/themes/<themename>/config/<configfile>.cfg > /tmp/bootsplash
```
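
Themes often ship one config per resolution. That is an assumption about typical theme layouts (for example a file named bootsplash-1024x768.cfg), so check what your theme actually contains. The hypothetical helper below picks the config whose file name contains a given resolution, so that the generated /tmp/bootsplash matches the mode you boot with:

```shell
# Hypothetical helper. Assumes <theme>/config/ holds one .cfg per resolution
# with the resolution in the file name, e.g. bootsplash-1024x768.cfg.
pick_splash_cfg() {
    theme_dir=$1   # e.g. /etc/bootsplash/themes/<themename>
    res=$2         # e.g. 1024x768
    for cfg in "$theme_dir"/config/*"$res"*.cfg; do
        # If nothing matches, the glob stays unexpanded and -f fails.
        if [ -f "$cfg" ]; then
            printf '%s\n' "$cfg"
            return 0
        fi
    done
    echo "no $res config in $theme_dir/config" >&2
    return 1
}
```

For example: `/sbin/splash -s -f "$(pick_splash_cfg /etc/bootsplash/themes/<themename> 1024x768)" > /tmp/bootsplash`.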

- Extract the initramfs somewhere (e.g. /tmp/inittree) using:

```shell
mkdir /tmp/inittree
cd /tmp/inittree
xz -dc <path-to-slax-initrfs>/initramfs | cpio -i
```
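
The extraction command assumes the image is xz-compressed, as the SLAX one is; an old-style initrd.gz would need gzip instead. A small sketch that checks the first bytes of the file before choosing a decompressor (`initramfs_compression` is a hypothetical name; the byte values are the standard xz, gzip and newc cpio signatures):

```shell
# Hypothetical helper: report how an initramfs/initrd image is compressed
# from its first 6 bytes (xz: fd 37 7a 58 5a 00, gzip: 1f 8b,
# uncompressed newc cpio: ASCII "070701").
initramfs_compression() {
    magic=$(dd if="$1" bs=6 count=1 2>/dev/null | od -An -tx1 | tr -d ' \n')
    case $magic in
        fd377a585a00*) echo xz ;;
        1f8b*)         echo gzip ;;
        303730373031*) echo none ;;
        *)             echo unknown; return 1 ;;
    esac
}
```

It prints `xz`, `gzip`, `none` (plain newc cpio) or `unknown`; pipe the image through `xz -dc` or `gzip -dc` accordingly before `cpio -i`.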

- Copy the whole /etc/bootsplash directory into /tmp/inittree/etc, and copy /tmp/bootsplash to /tmp/inittree so that it is in the root of the tree:

```shell
mkdir -p /tmp/inittree/etc
cp -R /etc/bootsplash /tmp/inittree/etc
cp /tmp/bootsplash /tmp/inittree
```

- Recompress the initramfs with:

```shell
cd /tmp/inittree
find . -print | cpio -o -H newc 2>/dev/null | xz -f --extreme > /tmp/initramfs
```
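
The generate, extract, copy and recompress steps can be strung together in one function. This is a sketch under assumptions: `rebuild_initramfs` and the `SPLASH` / `BOOTSPLASH_DIR` override variables are hypothetical names, the input is assumed to be xz-compressed like the SLAX initramfs, and the compression flags are the ones used above:

```shell
# Sketch: unpack an xz-compressed cpio initramfs, add /bootsplash and a copy
# of the theme tree, then repack it. SPLASH and BOOTSPLASH_DIR are
# hypothetical overrides defaulting to the paths used in the steps above.
rebuild_initramfs() {
    src=$1   # existing initramfs, e.g. the SLAX initrfs.img
    cfg=$2   # /etc/bootsplash/themes/<themename>/config/<configfile>.cfg
    out=$3   # path for the new initramfs
    splash=${SPLASH:-/sbin/splash}
    bsdir=${BOOTSPLASH_DIR:-/etc/bootsplash}

    tree=$(mktemp -d) || return 1

    # 1. Extract the existing initramfs into a fresh scratch tree.
    xz -dc "$src" | (cd "$tree" && cpio -i -d 2>/dev/null) \
        || { rm -rf "$tree"; return 1; }

    # 2. Write the splash data to the root as /bootsplash, the file the
    #    patched kernel tries to open.
    "$splash" -s -f "$cfg" > "$tree/bootsplash" \
        || { rm -rf "$tree"; return 1; }

    # 3. Copy the theme tree so it ends up at <tree>/etc/bootsplash.
    mkdir -p "$tree/etc" && cp -R "$bsdir" "$tree/etc/" \
        || { rm -rf "$tree"; return 1; }

    # 4. Repack as a newc cpio archive and compress with xz.
    (cd "$tree" && find . -print | cpio -o -H newc 2>/dev/null) \
        | xz -f --extreme > "$out"
    status=$?
    rm -rf "$tree"
    return $status
}
```

For example: `rebuild_initramfs <path-to-slax-initrfs>/initrfs.img /etc/bootsplash/themes/<themename>/config/<configfile>.cfg /tmp/initrfs.img`. Working in a fresh `mktemp -d` directory keeps files from an earlier extraction out of the new archive.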

- If this is for SLAX, the initramfs should be named initrfs.img; then replace the stock SLAX vmlinuz and initrfs.img with the patched kernel and the new initrfs.img. The initrfs.img grows by about 30 KB and vmlinuz by about 10 KB.

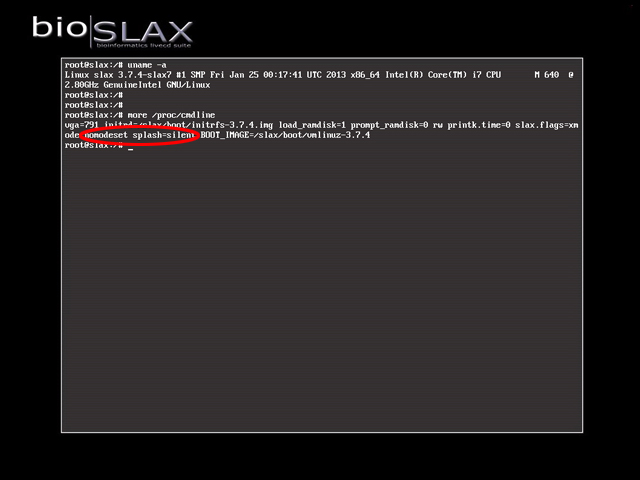

- Make sure to edit the boot options and set vga=791 (1024x768, 16-bit colour) to get the bootsplash to show.
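
For reference, vga=791 is the decimal form of VESA mode 0x317, a 1024x768 framebuffer with 16-bit colour; presumably the splash config you generated from should be for the same resolution. A hypothetical lookup for the common 16-bit modes:

```shell
# Hypothetical helper: map common vga= numbers (16-bit colour VESA modes)
# to resolutions. The hex value is the mode number the kernel sees.
vga_to_res() {
    case $1 in
        785) echo 640x480 ;;    # 0x311
        788) echo 800x600 ;;    # 0x314
        791) echo 1024x768 ;;   # 0x317
        794) echo 1280x1024 ;;  # 0x31A
        *)   return 1 ;;
    esac
}
```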

As with the old behaviour, the bootsplash only shows until KMS kicks in; then it disappears. To keep the bootsplash around and have a graphical background on your virtual terminals, boot with the kernel parameter “nomodeset” (which disables KMS).
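
To check after booting whether KMS was actually disabled and which vga= mode was requested, one can read /proc/cmdline. A minimal sketch; `kms_disabled` and `boot_param` are hypothetical helper names, and the optional file argument exists only so they can be tried on a saved command line:

```shell
# Succeeds if the kernel command line contains "nomodeset" (KMS disabled).
# Reads /proc/cmdline unless another file is given.
kms_disabled() {
    grep -qw nomodeset "${1:-/proc/cmdline}"
}

# Prints the value of a "name=value" kernel parameter such as vga; if the
# parameter appears more than once, the last occurrence wins.
boot_param() {
    tr ' ' '\n' < "${2:-/proc/cmdline}" | sed -n "s/^$1=//p" | tail -n 1
}
```

For example: `kms_disabled && echo "nomodeset active, vga=$(boot_param vga)"`.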

I’ve been trying nomodeset with a lot of different graphics cards, and even without KMS the X resolution is fine, especially with the newer distros. The only card I’m constantly having trouble with is the Intel GM945. That’s the only one I need KMS modesetting for so far.

I figure other cards cause issues too, but I find that the majority still work well without KMS, especially with the newer distros.

Based on this, to make a SLAX-based distro more appealing, I would propose booting with “nomodeset” by default, to keep the bootsplash and the graphical virtual-terminal backgrounds, and offering KMS as a fallback mode for the troublesome cards. In other words, add a “KMS” option alongside “Persistent changes”, “Graphical Desktop”, “Copy to Ram”, etc. on the SLAX boot page. That’s what I’ll be doing for the new BioSLAX anyway.