Spiffy title, I know, but we’re not talking police raids by the dozens here. I’m discussing how to get the most out of a RAID. It’s an age-old debate: should you go with a RAID1, or perhaps a RAID5 or RAID6? What are the benefits? Well, all this depends on exactly what you’re trying to achieve with the RAID setup. The pertinent questions one has to answer are:

1) Do you want more space?

2) Do you want redundancy?

3) Do you want to protect your data from drive failure?

4) Do you mind your write speeds not being as fast as they should be off a single drive?

Points (2) and (3) more or less tie into the same thing, though the experienced crowd may be crying foul on point (3): “RAID is not a backup!” And yes, it is NOT a backup, but it gives the less critical some semblance of one.

To start the ball rolling, let’s look at the RAID levels that are commonly in play.

| RAID LEVEL | DESCRIPTION | BENEFITS | DRAWBACKS |
| --- | --- | --- | --- |
| 0 | Striping of data across multiple disks | Good performance | No redundancy |
| 1 | Duplicating data over equivalently sized disks | Good read/write performance with redundancy | Expensive: needs twice the required space |
| 5 | Data written in blocks across all drives, single parity block | Good read performance for small, random I/O requests; has redundancy | Write performance, and read performance for large, sequential I/O requests, is poor; failure of more than one disk simultaneously will render all data lost |
| 6 | Data written in blocks across all drives, dual parity block | Good read performance for small, random I/O requests; has redundancy | Write performance, and read performance for large, sequential I/O requests, is poor; failure of more than two disks simultaneously will render all data lost |

If you want more detailed info, check this link out.

Most home users would go for RAID0, RAID1 or the combo RAID10. RAID0 maximizes the capacity, so that’s an obvious reason, but why choose RAID1, even though it reduces your capacity by 50% immediately? Well, let’s face it: as a home user, you wouldn’t spend a bomb on a Synology or QNAP 4 to 8 bay RAID enclosure that easily costs over $1000 without any disks (or even $600 for a WD EX4 4 bay system). No, home users would simply get a 2 bay model, which conveniently brings the choices down to only RAID0 or RAID1. If you’re a capacity freak, you’ll want all your disks striped together, and if you are worried about backups, then sacrificing 50% of the total capacity isn’t anything you’d be worried about. The folks with more money to burn would get a 4 bay system and stripe 2 disks together to get a larger space, then mirror it to another 2 disks, forming a RAID10. (Strictly speaking, striping first and mirroring second is RAID 0+1, while RAID10 mirrors first and stripes second; the usable capacity is the same either way.)
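To put numbers on those trade-offs, here is a minimal sketch of the usable capacity for the levels discussed so far. The function name `usable_capacity_tb` and the 4TB disk size in the examples are assumptions made purely for illustration, and the figures are before formatting.

```python
def usable_capacity_tb(level: str, disks: int, disk_tb: float) -> float:
    """Usable capacity in TB (before formatting) for equally sized disks."""
    minimum_disks = {"0": 2, "1": 2, "5": 3, "6": 4, "10": 4}
    if level not in minimum_disks:
        raise ValueError(f"unsupported RAID level: {level}")
    if disks < minimum_disks[level]:
        raise ValueError(f"RAID{level} needs at least {minimum_disks[level]} disks")

    if level == "0":
        return disks * disk_tb        # everything is data, no redundancy
    if level == "1":
        return disk_tb                # every disk holds the same copy
    if level == "5":
        return (disks - 1) * disk_tb  # one disk's worth of parity
    if level == "6":
        return (disks - 2) * disk_tb  # two disks' worth of parity

    # RAID10: half the disks hold data, the other half hold the mirror.
    if disks % 2 != 0:
        raise ValueError("RAID10 needs an even number of disks")
    return (disks // 2) * disk_tb


# Hypothetical home setups with 4TB disks (the disk size is an assumption).
for level, disks in [("0", 2), ("1", 2), ("10", 4), ("5", 4), ("6", 4)]:
    print(f"RAID{level} on {disks} x 4TB: {usable_capacity_tb(level, disks, 4.0)} TB")
```

On a 2 bay box the choice is between all of the space (RAID0) and half of it (RAID1); on 4 bays, RAID10 and RAID6 give the same usable space but protect it differently.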

For a more professional setup, we consider capacity, redundancy, data protection and write access. Yeah, that’s basically all 4 points. There is simply no compromise for critical corporate data. We need to maximize space so more data can be stored, create redundancy so that data is always available, make sure data can be rebuilt should drives fail, ensure that access to the system is fast enough not to cause delays, and finally have a constantly updated copy of the data that can be copied from if the main data is corrupted. That last point is the REAL backup. These are the instances where we employ RAID5 or RAID6 in combination with RAID1 or RAID0, giving RAID50, RAID51, RAID60 and RAID61. On top of these we employ a fast cache (usually an array of SSDs that takes data in quickly and holds it while it’s written to the actual RAID setup) and then a full backup solution like de-dup, which is offered by a number of vendors. Yes, gone are the days when we used a super slow DDS2/3/4 tape library to do backups. Seriously, do you want to spend 96 hours backing up 60TB of data when you can churn out 200TB in under 24? Not to mention how long it will take to restore that data.
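The gap between those two backup figures is easier to appreciate as throughput. A rough sketch using only the numbers quoted above; the helper name `throughput_tb_per_hour` is made up for this example, and "under 24 hours" is treated as exactly 24, so the faster rate is a lower bound.

```python
def throughput_tb_per_hour(data_tb: float, hours: float) -> float:
    """Average backup throughput in TB per hour."""
    return data_tb / hours


tape_rate = throughput_tb_per_hour(60, 96)     # old tape library figure
modern_rate = throughput_tb_per_hour(200, 24)  # disk-based backup figure

print(f"Tape:   {tape_rate:.3f} TB/hour")
print(f"Modern: {modern_rate:.3f} TB/hour")
print(f"Speed-up: at least {modern_rate / tape_rate:.1f}x")

# How long the tape library would need for the same 200TB job.
print(f"200TB at tape speed: {200 / tape_rate:.0f} hours")
```

Restores run into the same arithmetic, which is why the restore time matters as much as the backup window.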

Maximizing the points is something that has to be done carefully. You don’t want to maximize capacity and then realize that you don’t have enough data protection or redundancy, or vice versa. Never mind the unrecoverable read errors (URE) for RAID5 and RAID6 setups (mostly rubbish, by the way; mostly) and the problems larger disks cause in the calculations required to read and write your data. With so many considerations, it makes one wonder why we even consider anything other than a RAID10. Well, cost is a major factor. Fortunately, cheap storage pods like Backblaze’s are all the rage these days, as are JBOD (Just a Bunch Of Disks) enclosures, which can interconnect with each other to form ever-growing storage. Now, just because they are cheap doesn’t mean you’re going to go all crazy and start getting a bunch of these for RAID10. Be smart and look at how to avoid the headaches of RAID management that are particular to your own requirements. There is no way to tell you how to do this; it’s just something you have to figure out, but I can give you an example.

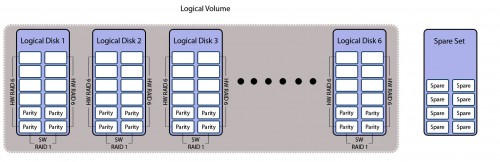

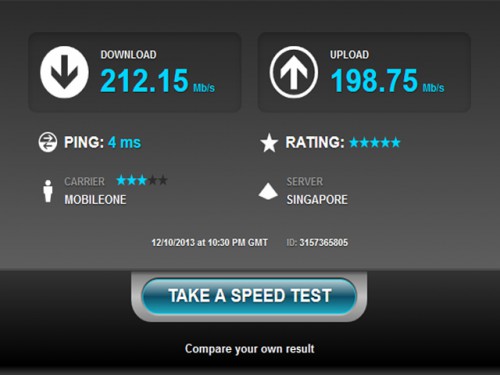

We needed a large storage capacity, a datastore of sorts, something in the region of over 80TB (for a start), with the ability to grow as and when necessary without affecting current data. The data being written to it had to have very strong redundancy (research data that has to be kept for a minimum of 7 years); read and write speeds were irrelevant. We opted for 80 x 4TB disks, giving us a raw capacity of 320TB.

Now, following best practices, we could have had 10 x 8-drive hardware RAID6 sets forming 10 logical disks (the most straightforward option) in a single logical volume, which would be ~200TB after formatting. But that would have seriously screwed us over if more than two disks failed in a RAID set simultaneously. Don’t say it doesn’t happen; it does and it has! We could have gone with 5 x 8-drive software RAID6 sets forming 5 logical disks, with a hardware RAID1 on the whole thing, forming 1 logical volume. That would have given us better protection, but it was still iffy: it would have reduced our capacity to roughly ~100TB after formatting and impeded our ability to grow the capacity easily, given that the RAID1 would require logical disks of equal size.

What we eventually ended up with was 6 x hardware RAID6 sets of 12 disks, each disk on a software RAID1, forming 6 logical disks within a single logical volume, with 8 global hot spares. A hardware RAID forming logical disks, with a software RAID for each physical disk, all in a logical volume (hence the title). There is nothing straightforward about this solution, let me tell you that much, but it will save a whole lot of time and trouble compared to the others.

As far as protection goes, it doesn’t get better than this, and it also allows more logical disks to be added without needing them to match the capacity of the existing logical disks. So long as the disks forming the software RAID1 within a logical disk are of equivalent size, it doesn’t matter much. What was the trade-off of this super-protected, easy-to-grow setup? Space. Pure and simple. By utilizing this setup, we had 6 x RAID6 (2 x parity), mirrored, which worked out to be only 16TB x 6, or ~90TB after formatting. Did it serve our purposes? More than 80TB? Check. Able to grow easily without affecting current data? Check. Strong redundancy? Check.
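For anyone who wants to check the arithmetic, here is a small sketch of the three layouts. It reflects my reading of the description above, namely that each 12-disk set is 6 mirrored pairs with RAID6 across the pairs, and the variable names are made up for the example. The results are before formatting, so they come out higher than the ~200TB, ~100TB and ~90TB formatted figures.

```python
DISK_TB = 4        # 4TB disks
TOTAL_DISKS = 80   # 80 disks, 320TB raw


def raid6_data_members(members: int) -> int:
    """RAID6 gives up two members' worth of space to parity."""
    if members < 4:
        raise ValueError("RAID6 needs at least 4 members")
    return members - 2


# Option 1: 10 x 8-drive RAID6 sets, no mirroring.
option1_disks = 10 * 8
option1_tb = 10 * raid6_data_members(8) * DISK_TB

# Option 2: 5 x 8-drive RAID6 sets, with the whole thing mirrored (RAID1).
option2_disks = 5 * 8 * 2
option2_tb = 5 * raid6_data_members(8) * DISK_TB

# Chosen: 6 sets of 12 disks, every disk mirrored, RAID6 across the
# 6 mirrored pairs in each set, plus 8 global hot spares.
chosen_disks = 6 * 12 + 8
chosen_tb = 6 * raid6_data_members(12 // 2) * DISK_TB

for name, disks, tb in [
    ("10 x RAID6", option1_disks, option1_tb),
    ("5 x RAID6 + RAID1", option2_disks, option2_tb),
    ("6 x RAID6 over mirrored pairs", chosen_disks, chosen_tb),
]:
    print(f"{name}: {disks} disks, {tb} TB usable of {TOTAL_DISKS * DISK_TB} TB raw")
```

All three layouts use the same 80 disks; the difference is purely in how much of the 320TB raw is traded for protection.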

In fact, the other possibilities would also have given us a reasonable solution, but the issue is in the management of the solution, especially when failure occurs. Most folks, I find, don’t seem to take what needs to be done in failure situations into consideration. They only look at the working scenario and say “we have redundancy, so we’re ok”. They don’t consider how long it takes to rebuild, what their contingencies are during a rebuild so that data is still accessible, whether they have to port data out before adding more disks, etc. For us, we don’t like to take chances with research data, so even with this super-protected system, we still have a smaller system that we sync data to from this 90TB datastore. The size of the actual written data is only about 30TB at the moment, so a smaller (60TB), less protected system (RAID51) holding an exact copy gives us a failover option if we need to work on the actual datastore, and the users won’t even know the main datastore is down.

Main point: know what you need and don’t always take the most straightforward solution. It might not seem like it’s worth the trouble, because things are always smooth when systems work, but it’s what happens when they don’t, and what you have put in place to mitigate those scenarios, that separates the real techs from the wannabes. Which are you?

UPDATE:

Some folks have been telling me my diagram is wrong and that the parity shouldn’t be on individual disks. Yes, that is right, it shouldn’t. In modern systems the parity is distributed across all the disks, but I used single disks to present the parity data so that it was immediately apparent how much usable disk space was available. Think of the diagram as a logical, not physical, representation.